Agents 2.0: From Shallow Loops to Deep Agents

October 12, 2025 · 5 minute read

For the past year, building an AI agent usually meant one thing: setting up a while loop. Take a user prompt, send it to an LLM, parse a tool call, execute the tool, send the result back, and repeat. This is what we call a Shallow Agent or Agent 1.0.

This architecture is fantastically simple for transactional tasks like “What’s the weather in Tokyo and what should I wear?”. But when asked to perform a task that requires 50 steps over three days, these agents invariably get distracted, lose context, enter infinite loops, or hallucinate, because the task requires too many steps for a single context window.

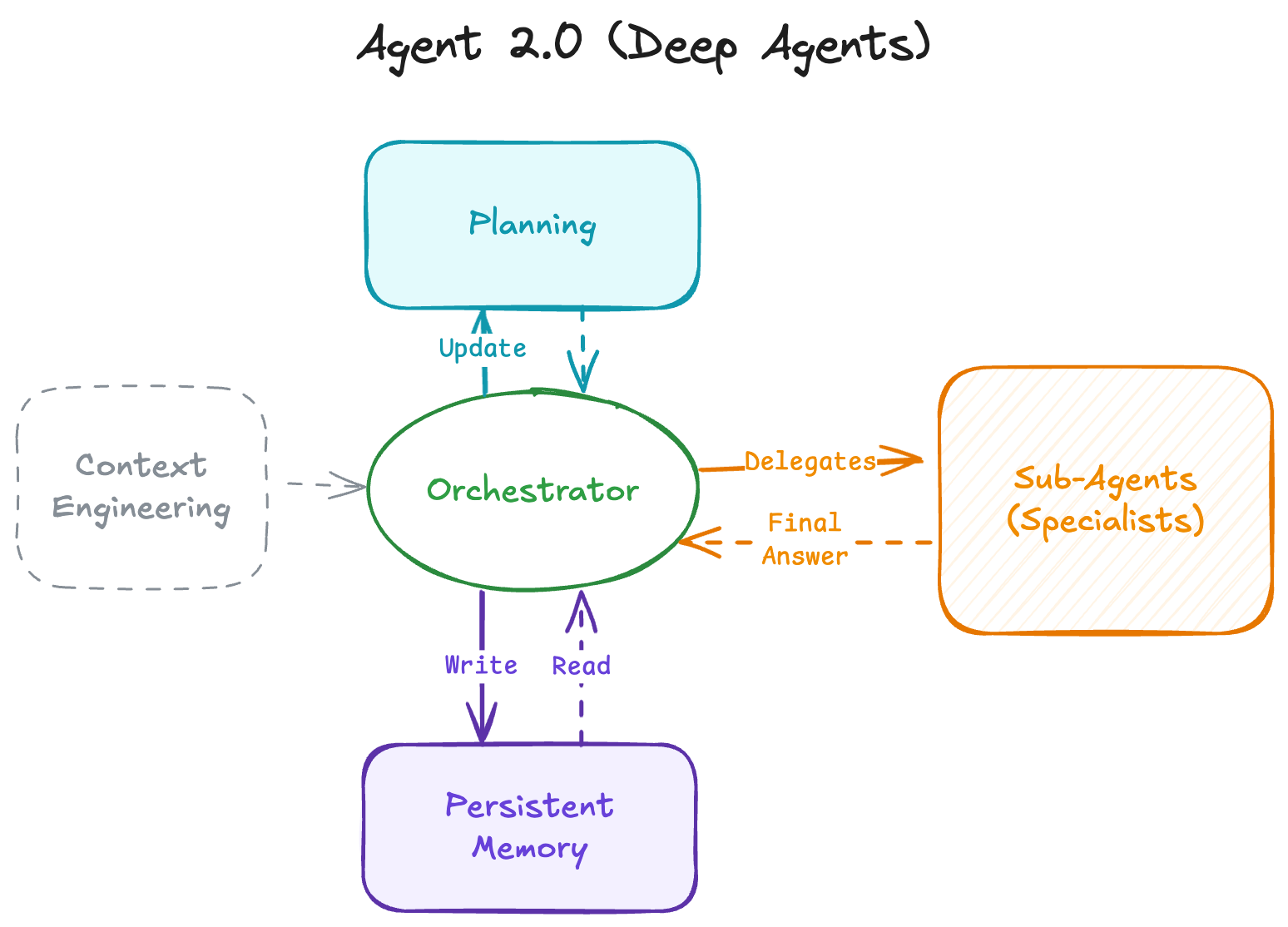

We are seeing an architectural shift towards Deep Agents or Agents 2.0. These systems do not just react in a loop. They combine agentic patterns to plan, manage a persistent memory/state, and delegate work to specialized sub-agents to solve multi-step, complex problems.

Agents 1.0: The Limits of the “Shallow” Loop

To understand where we are going, we must understand where we are. Most agents today are “shallow”. This means they rely entirely on the LLM’s context window (the conversation history) as their state. A typical run looks like this:

- User Prompt: “Find the price of Apple stock and tell me if it’s a good buy.”
- LLM Reason: “I need to use a search tool.”
- Tool Call: search("AAPL stock price")
- Observation: The tool returns data.
- LLM Answer: Generates a response based on the observation or calls another tool.
- Repeat: Loop until done.
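The loop above can be sketched in a few lines of Python. This is a minimal illustration, not a real framework: `fake_llm`, `TOOLS`, and `run_shallow_agent` are hypothetical names, and the "LLM" is a stub that requests one search and then answers. The point to notice is that the `history` list is the agent's only state.

```python
from typing import Callable

# Hypothetical stand-in for a real LLM call: requests one search, then answers.
def fake_llm(history: list[dict]) -> dict:
    last = history[-1]
    if last["role"] == "tool":
        return {"type": "answer", "content": f"Based on the data: {last['content']}"}
    return {"type": "tool_call", "name": "search", "args": {"query": "AAPL stock price"}}

# Hypothetical tool registry; a real agent would call external APIs here.
TOOLS: dict[str, Callable[..., str]] = {
    "search": lambda query: f"results for {query!r}",
}

def run_shallow_agent(prompt: str, llm=fake_llm, max_steps: int = 10) -> tuple[str, list[dict]]:
    # The conversation history is the agent's entire state.
    history = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        reply = llm(history)
        if reply["type"] == "answer":
            history.append({"role": "assistant", "content": reply["content"]})
            return reply["content"], history
        # Execute the requested tool and feed the raw observation back in.
        observation = TOOLS[reply["name"]](**reply["args"])
        history.append({"role": "assistant", "content": f"call {reply['name']}"})
        history.append({"role": "tool", "content": observation})
    raise RuntimeError("step budget exhausted without an answer")

answer, history = run_shallow_agent(
    "Find the price of Apple stock and tell me if it's a good buy."
)
print(answer)
print(len(history), "messages in context")
```

Every tool observation is appended to `history` verbatim, so the context grows with each step. That growth is harmless for a handful of steps and becomes the core problem for long tasks.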

This architecture is stateless and ephemeral. The agent’s entire “brain” lives within the context window. When a task becomes complex, e.g. “Research 10 competitors, analyze their pricing models, build a comparison spreadsheet, and write a strategic summary”, it will fail due to:

- Context Overflow: The history fills up with tool outputs (HTML, messy data), pushing instructions out of the context window.
- Loss of Goal: Amidst the noise of intermediate steps, the agent forgets the original objective.
- No Recovery Mechanism: If it goes down a rabbit hole, it rarely has the foresight to stop, backtrack, and try a new approach.

Shallow agents are great at tasks that take 5-15 steps. They are terrible at tasks that take 500.

The Architecture of Agents 2.0 (Deep Agents)

Deep Agents decouple planning from execution and manage memory external to the context window. The architecture consists of four pillars.

Pillar 1: Explicit Planning

Shallow agents plan implicitly via chain-of-thought (“I should do X, then Y”). Deep agents use tools to create and maintain an explicit plan, which can be as simple as a to-do list in a Markdown document.

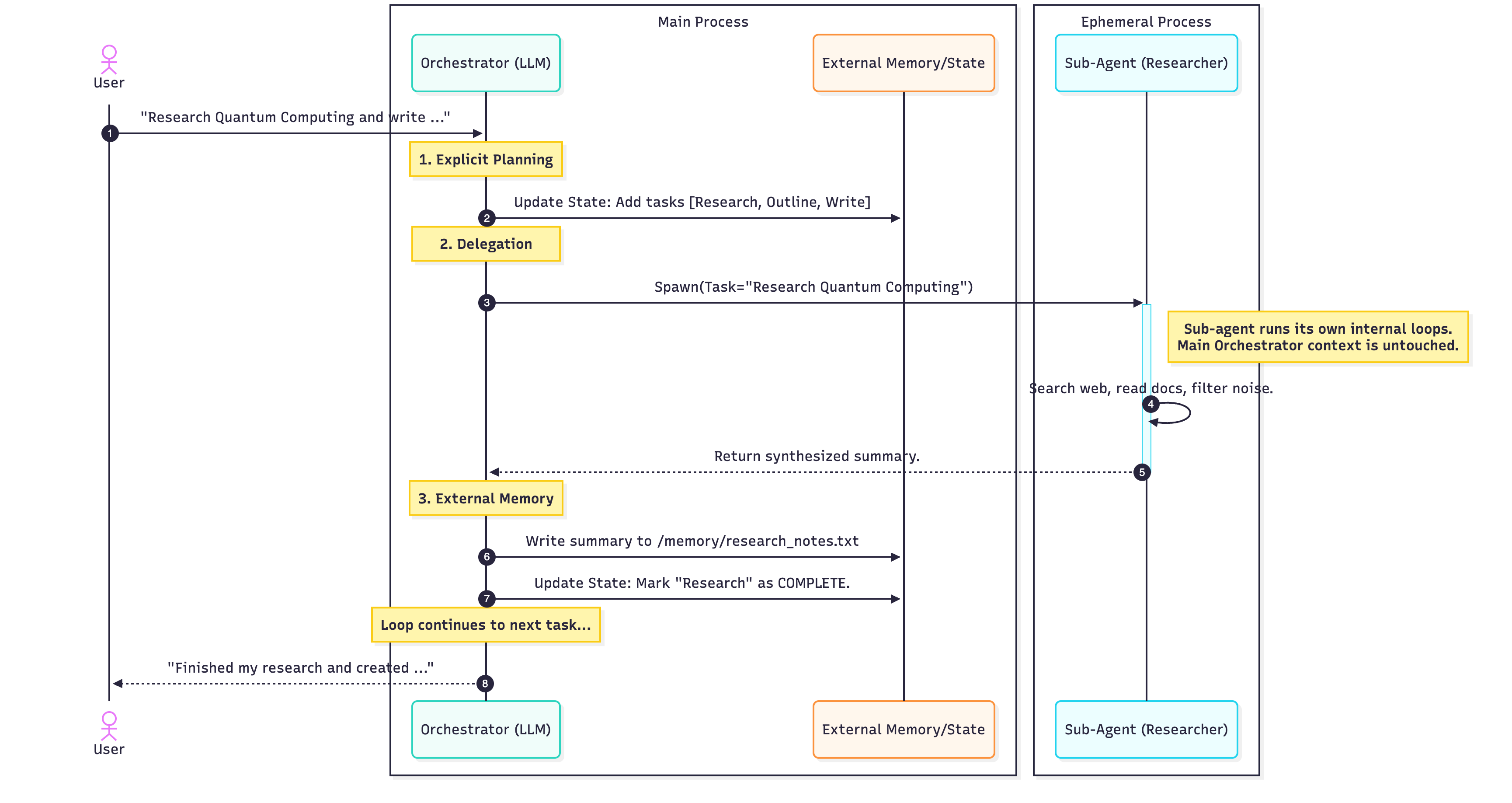

Between every step, the agent reviews and updates this plan, marking steps as pending, in_progress, or completed and adding notes where needed. If a step fails, it doesn’t just retry blindly; it updates the plan to accommodate the failure. This keeps the agent focused on the high-level task.
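As a rough sketch of what such a plan could look like in code, the example below keeps a to-do list with the three statuses named above and renders it as Markdown. The class names, the `[~]` marker for in-progress steps, and the sample task are all assumptions made for illustration; real deep-agent frameworks expose their own planning tools.

```python
from dataclasses import dataclass, field
from typing import Optional

STATUSES = ("pending", "in_progress", "completed")

@dataclass
class Step:
    description: str
    status: str = "pending"
    notes: list[str] = field(default_factory=list)

@dataclass
class Plan:
    goal: str
    steps: list[Step] = field(default_factory=list)

    def update(self, index: int, status: str, note: Optional[str] = None) -> None:
        # Reject statuses outside the agreed vocabulary.
        if status not in STATUSES:
            raise ValueError(f"unknown status: {status}")
        step = self.steps[index]
        step.status = status
        if note:
            step.notes.append(note)

    def insert_after(self, index: int, description: str) -> None:
        # Revise the plan (for example after a failure) instead of retrying blindly.
        self.steps.insert(index + 1, Step(description))

    def to_markdown(self) -> str:
        marks = {"pending": "[ ]", "in_progress": "[~]", "completed": "[x]"}
        lines = [f"# Plan: {self.goal}", ""]
        for step in self.steps:
            lines.append(f"- {marks[step.status]} {step.description} ({step.status})")
            lines.extend(f"  - note: {n}" for n in step.notes)
        return "\n".join(lines)

plan = Plan(
    "Compare competitor pricing",
    [Step("Collect pricing pages"), Step("Build comparison table"), Step("Write summary")],
)
plan.update(0, "in_progress", note="Vendor B page failed to load")
plan.insert_after(0, "Find cached copy of Vendor B pricing")
plan.update(0, "completed")
print(plan.to_markdown())
```

The rendered Markdown can be written to a file and re-read between steps, so the goal and the remaining work stay visible even when the conversation history is noisy.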

Pillar 2: Hierarchical Delegation (Sub-Agents)

Complex tasks require specialization. Shallow agents try to be a jack-of-all-trades in one prompt. Deep Agents use an Orchestrator → Sub-Agent pattern.

The Orchestrator delegates tasks to sub-agents, each of which starts with a clean context. A sub-agent (e.g., a “Researcher,” a “Coder,” a “Writer”) runs its own tool call loops (searching, erroring, retrying), compiles a final answer, and returns only that synthesized answer to the Orchestrator.
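The following sketch illustrates the context isolation described above, under simplifying assumptions: `SubAgent`, `Orchestrator`, and the `research` and `write` functions are hypothetical stubs, and a plain list stands in for each agent's context window.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class SubAgent:
    role: str
    # Hypothetical worker: runs the sub-agent's own loop using a scratch context.
    work: Callable[[str, list[str]], str]

    def run(self, task: str) -> str:
        scratch: list[str] = []  # fresh, clean context for every task
        result = self.work(task, scratch)
        # The scratch context (searches, errors, retries) is discarded here.
        return result

def research(task: str, scratch: list[str]) -> str:
    scratch.append(f"search: {task}")
    scratch.append("error: timeout")
    scratch.append(f"retry search: {task}")
    return f"3 key findings on {task}"

def write(task: str, scratch: list[str]) -> str:
    scratch.append("draft v1")
    scratch.append("draft v2")
    return f"summary written for: {task}"

@dataclass
class Orchestrator:
    agents: dict[str, SubAgent]
    context: list[str] = field(default_factory=list)

    def delegate(self, role: str, task: str) -> str:
        result = self.agents[role].run(task)
        # Only the synthesized answer enters the orchestrator's context.
        self.context.append(f"{role}: {result}")
        return result

orch = Orchestrator({
    "researcher": SubAgent("researcher", research),
    "writer": SubAgent("writer", write),
})
findings = orch.delegate("researcher", "competitor pricing")
orch.delegate("writer", findings)
print(orch.context)
```

After both delegations the orchestrator's context holds two short entries, while the timeouts, retries, and intermediate drafts never leave the sub-agents.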

Pillar 3: Persistent Memory

To prevent context window overflow, Deep Agents use external memory, such as a filesystem or a vector database, as their source of truth. Agent systems like Claude Code and Manus give agents read/write access to such storage. An agent writes intermediate results (code, draft text, raw data) to it, and subsequent agents reference file paths or run queries to retrieve only what is necessary. This shifts the paradigm from “remembering everything” to “knowing where to find information.”
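A minimal filesystem-backed version of this idea might look like the sketch below. `Workspace` is a hypothetical helper built only on the Python standard library; it is not the storage API of Claude Code, Manus, or any other product.

```python
import tempfile
from pathlib import Path
from typing import Optional

class Workspace:
    """Hypothetical file-backed memory shared by the agents of one task."""

    def __init__(self, root: Path):
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def write(self, relative_path: str, content: str) -> str:
        path = self.root / relative_path
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(content, encoding="utf-8")
        # Only this short reference needs to enter the context window.
        return relative_path

    def read(self, relative_path: str, max_chars: Optional[int] = None) -> str:
        text = (self.root / relative_path).read_text(encoding="utf-8")
        # Let callers retrieve only as much as they need.
        return text if max_chars is None else text[:max_chars]

workspace = Workspace(Path(tempfile.mkdtemp()))

# A large, messy tool output goes to disk instead of into the conversation.
raw_html = "<html>" + "pricing row " * 5000 + "</html>"
ref = workspace.write("research/vendor_a.html", raw_html)
context = [f"Saved raw page to {ref} ({len(raw_html)} chars)"]

# A later agent pulls in just a slice when it actually needs the data.
preview = workspace.read(ref, max_chars=40)
print(context[0])
print(preview)
```

The context carries a one-line pointer to the file rather than tens of thousands of characters of HTML, which is the "knowing where to find information" half of the trade.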

Pillar 4: Extreme Context Engineering

Smarter models do not require less prompting; they require better context. You cannot get Agent 2.0 behavior with a prompt that says, “You are a helpful AI.” Deep Agents rely on highly detailed instructions, sometimes thousands of tokens long. These define:

- Criteria for when to stop and plan before acting.
- Protocols for when to spawn a sub-agent vs. doing the work directly.
- Tool definitions, with examples of how and when to use each tool.
- Standards for file naming and directory structures.
- Strict formats for human-in-the-loop collaboration.
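One way to keep such instructions maintainable is to assemble the system prompt from named sections, one per item in the list above. The sketch below is illustrative only: every piece of section text is invented for this example, and a production prompt would be far longer.

```python
# All section text below is hypothetical wording, invented for illustration.
PROMPT_SECTIONS = {
    "Planning": "Before acting on a multi-step request, stop and write a plan to plan.md.",
    "Delegation": "Spawn a sub-agent for research or coding tasks; answer simple lookups yourself.",
    "Tools": "search(query): use for facts you do not have. Example: search('AAPL stock price').",
    "Files": "Store raw data under data/ and drafts under drafts/, using snake_case file names.",
    "Human review": "When approval is needed, reply with a block that starts with 'APPROVAL NEEDED:'.",
}

def build_system_prompt(role: str, sections: dict[str, str]) -> str:
    # Start with the role, then add one titled block per section, in order.
    parts = [f"You are {role}."]
    for title, body in sections.items():
        parts.append(f"## {title}\n{body}")
    return "\n\n".join(parts)

system_prompt = build_system_prompt("the orchestrator of a deep agent", PROMPT_SECTIONS)
print(system_prompt)
```

Keeping each concern in its own section makes it easier to revise one protocol, such as the delegation rules, without disturbing the rest of the prompt.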

Visualizing a Deep Agent Flow

How do these pillars come together? Let’s look at a sequence diagram for a Deep Agent handling a complex request: “Research Quantum Computing and write a summary to a file.”
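One possible ordering of the messages for that request is traced by the sketch below. It is a toy simulation under stated assumptions: the participants (`Orchestrator`, `Researcher`, `Writer`, `Filesystem`), the file names, and the stubbed sub-agents are hypothetical, and no real LLM or search tool is called.

```python
import tempfile
from pathlib import Path

events: list[str] = []  # ordered trace of who talks to whom

def log(sender: str, receiver: str, message: str) -> None:
    events.append(f"{sender} -> {receiver}: {message}")

def researcher(topic: str, workdir: Path) -> str:
    # Stub sub-agent: a real one would run its own search loop first.
    notes = workdir / "notes.md"
    notes.write_text(f"# Notes on {topic}\n- placeholder finding\n", encoding="utf-8")
    log("Researcher", "Filesystem", f"write {notes.name}")
    return notes.name  # return a file reference, not the raw notes

def writer(notes_name: str, workdir: Path) -> str:
    notes = (workdir / notes_name).read_text(encoding="utf-8")
    log("Writer", "Filesystem", f"read {notes_name}")
    summary = workdir / "summary.md"
    summary.write_text(f"# Summary\nBased on {len(notes)} chars of notes.\n", encoding="utf-8")
    log("Writer", "Filesystem", f"write {summary.name}")
    return summary.name

def orchestrator(request: str, workdir: Path) -> dict[str, str]:
    log("User", "Orchestrator", request)
    plan = {"research": "pending", "write": "pending"}  # explicit plan
    log("Orchestrator", "Plan", "create 2 steps")
    log("Orchestrator", "Researcher", "delegate research")
    notes_name = researcher("Quantum Computing", workdir)
    plan["research"] = "completed"
    log("Orchestrator", "Writer", "delegate writing")
    summary_name = writer(notes_name, workdir)
    plan["write"] = "completed"
    log("Orchestrator", "User", f"done, see {summary_name}")
    return plan

workdir = Path(tempfile.mkdtemp())
plan = orchestrator("Research Quantum Computing and write a summary to a file.", workdir)
print("\n".join(events))
```

Each printed line corresponds to one arrow in a sequence diagram: the plan is created first, sub-agents exchange data through files, and the orchestrator only ever sees file names and statuses.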

Conclusion

Moving from Shallow Agents to Deep Agents (Agent 1.0 to Agent 2.0) isn’t just about connecting an LLM to more tools. It is a shift from reactive loops to proactive architecture. It is about better engineering around the model.

Implementing explicit planning, hierarchical delegation via sub-agents, and persistent memory allows us to control the context. By controlling the context, we control the complexity, unlocking the ability to solve problems that take hours or days, not just seconds.

Acknowledgements

This overview was created with the help of deep and manual research. The term “Deep Agents” was notably popularized by the LangChain team to describe this architectural evolution.

Thanks for reading! If you have any questions or feedback, please let me know on Twitter or LinkedIn.
